| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | ||||||

| 2 | 3 | 4 | 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 | 21 | 22 |

| 23 | 24 | 25 | 26 | 27 | 28 | 29 |

| 30 |

- ogg

- Installation

- Oracle

- goldengate

- adg

- oracle goldengate

- 오라클

- 오라클구조

- SILENTMODE

- 데이터베이스

- linux

- 데이터가드

- 디비투

- 19c

- oracle installation

- 오라클아키텍쳐

- ORACLE19C

- recovery

- ActiveDataGuard

- backup

- Database

- 백업

- rman

- Oracle 19c

- SSH

- oracle recovery

- OracleGoldenGate

- 오지지

- DataGuard

- 오라클설치

- Today

- Total

DoubleDBDeep

[Kafka] Mysql -> Oracle Apache Spark 통해 Data 이관 본문

작업환경 (OS/ Kernel)

Linux 7.9 5.4.17-2102.201.3.el7uek.x86_64

Apache Spark Install

1. JDK 설치

https://yum.oracle.com/repo/OracleLinux/OL7/9/base/x86_64/index.html

Oracle Linux 7 (x86_64) Update 9 | Oracle, Software. Hardware. Complete.

yum.oracle.com

여기서 java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64.rpm 를 받아 linux server의 디렉토리에 넣어둠

rpm 설치

cd /media/sf_01.oracle/2.RPM/KAFKA

rpm -ivh java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64.rpm

root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# rpm -ivh java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64.rpm

warning: java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

package java-1.8.0-openjdk-1:1.8.0.262.b10-1.el7.x86_64 is already installed

root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# rpm -ivh java-1.8.0-openjdk-devel-1.8.0.262.b10-1.el7.x86_64.rpm

warning: java-1.8.0-openjdk-devel-1.8.0.262.b10-1.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:java-1.8.0-openjdk-devel-1:1.8.0.################################# [100%]

확인

java -version

root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# java -version

openjdk version "1.8.0_262"

OpenJDK Runtime Environment (build 1.8.0_262-b10)

OpenJDK 64-Bit Server VM (build 25.262-b10, mixed mode)

javac -version

root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# javac -version

javac 1.8.0_262

2. Python 설치

yum install python3.x86_64root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# yum install python3.x86_64

Loaded plugins: langpacks, ulninfo

mysql-connectors-community | 2.6 kB 00:00:00

mysql-tools-community | 2.6 kB 00:00:00

mysql80-community | 2.6 kB 00:00:00

ol7_UEKR6 | 3.0 kB 00:00:00

ol7_latest | 3.6 kB 00:00:00

(1/8): mysql-connectors-community/x86_64/primary_db | 109 kB 00:00:00

(2/8): mysql-tools-community/x86_64/primary_db | 98 kB 00:00:00

(3/8): mysql80-community/x86_64/primary_db | 278 kB 00:00:00

(4/8): ol7_UEKR6/x86_64/updateinfo | 1.1 MB 00:00:01

(5/8): ol7_latest/x86_64/group_gz | 136 kB 00:00:00

(6/8): ol7_latest/x86_64/updateinfo | 3.6 MB 00:00:03

(7/8): ol7_UEKR6/x86_64/primary_db | 69 MB 00:00:07

(8/8): ol7_latest/x86_64/primary_db | 49 MB 00:00:13

Resolving Dependencies

--> Running transaction check

---> Package python3.x86_64 0:3.6.8-21.0.1.el7_9 will be installed

--> Processing Dependency: python3-libs(x86-64) = 3.6.8-21.0.1.el7_9 for package: python3-3.6.8-21.0.1.el7_9.x86_64

--> Processing Dependency: python3-pip for package: python3-3.6.8-21.0.1.el7_9.x86_64

--> Processing Dependency: python3-setuptools for package: python3-3.6.8-21.0.1.el7_9.x86_64

--> Processing Dependency: libpython3.6m.so.1.0()(64bit) for package: python3-3.6.8-21.0.1.el7_9.x86_64

--> Running transaction check

---> Package python3-libs.x86_64 0:3.6.8-21.0.1.el7_9 will be installed

---> Package python3-pip.noarch 0:9.0.3-8.0.3.el7 will be installed

---> Package python3-setuptools.noarch 0:39.2.0-10.0.1.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

==============================================================================================================================================================================================================

Package Arch Version Repository Size

==============================================================================================================================================================================================================

Installing:

python3 x86_64 3.6.8-21.0.1.el7_9 ol7_latest 70 k

Installing for dependencies:

python3-libs x86_64 3.6.8-21.0.1.el7_9 ol7_latest 7.0 M

python3-pip noarch 9.0.3-8.0.3.el7 ol7_latest 1.6 M

python3-setuptools noarch 39.2.0-10.0.1.el7 ol7_latest 628 k

Transaction Summary

==============================================================================================================================================================================================================

Install 1 Package (+3 Dependent packages)

Total download size: 9.3 M

Installed size: 47 M

Is this ok [y/d/N]: y

Downloading packages:

warning: /var/cache/yum/x86_64/7Server/ol7_latest/packages/python3-3.6.8-21.0.1.el7_9.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY ] 0.0 B/s | 0 B --:--:-- ETA

Public key for python3-3.6.8-21.0.1.el7_9.x86_64.rpm is not installed

(1/4): python3-3.6.8-21.0.1.el7_9.x86_64.rpm | 70 kB 00:00:00

(2/4): python3-pip-9.0.3-8.0.3.el7.noarch.rpm | 1.6 MB 00:00:00

(3/4): python3-setuptools-39.2.0-10.0.1.el7.noarch.rpm | 628 kB 00:00:00

(4/4): python3-libs-3.6.8-21.0.1.el7_9.x86_64.rpm | 7.0 MB 00:00:01

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 4.6 MB/s | 9.3 MB 00:00:02

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-oracle

Importing GPG key 0xEC551F03:

Userid : "Oracle OSS group (Open Source Software group) <build@oss.oracle.com>"

Fingerprint: 4214 4123 fecf c55b 9086 313d 72f9 7b74 ec55 1f03

Package : 7:oraclelinux-release-7.9-1.0.9.el7.x86_64 (@anaconda/7.9)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-oracle

Is this ok [y/N]: y

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Warning: RPMDB altered outside of yum.

Installing : python3-pip-9.0.3-8.0.3.el7.noarch 1/4

Installing : python3-libs-3.6.8-21.0.1.el7_9.x86_64 2/4

Installing : python3-3.6.8-21.0.1.el7_9.x86_64 3/4

Installing : python3-setuptools-39.2.0-10.0.1.el7.noarch 4/4

Verifying : python3-setuptools-39.2.0-10.0.1.el7.noarch 1/4

Verifying : python3-pip-9.0.3-8.0.3.el7.noarch 2/4

Verifying : python3-3.6.8-21.0.1.el7_9.x86_64 3/4

Verifying : python3-libs-3.6.8-21.0.1.el7_9.x86_64 4/4

Installed:

python3.x86_64 0:3.6.8-21.0.1.el7_9

Dependency Installed:

python3-libs.x86_64 0:3.6.8-21.0.1.el7_9 python3-pip.noarch 0:9.0.3-8.0.3.el7 python3-setuptools.noarch 0:39.2.0-10.0.1.el7

Complete!

확인

root@eoks01:/media/sf_01.oracle/2.RPM/KAFKA# python3 --version

Python 3.6.8

root@eoks01:/root# python3

Python 3.6.8 (default, Nov 8 2023, 18:52:33)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44.0.3)] on linux

Type "help", "copyright", "credits" or "license" for more information.

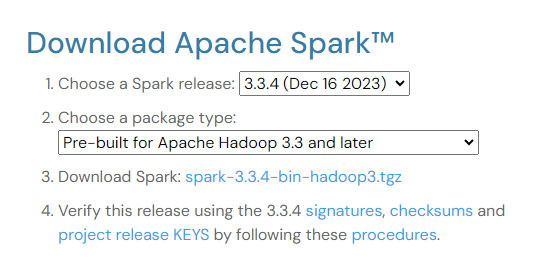

3. Apache Spark 설치

https://spark.apache.org/downloads.html

Downloads | Apache Spark

Download Apache Spark™ Choose a Spark release: Choose a package type: Download Spark: Verify this release using the and project release KEYS by following these procedures. Note that Spark 3 is pre-built with Scala 2.12 in general and Spark 3.2+ provides

spark.apache.org

설치파일 압축해제

cd /media/sf_01.oracle/2.RPM/KAFKA

tar -xvf spark-3.3.4-bin-hadoop3.tgz /media

cd /media

mv spark-3.3.4-bin-hadoop3 spark-3.3.4

환경변수 설정

vi ~/.bash_profile

##########################################

# 4. SPARK

##########################################

export SPARK_HOME=/media/spark-3.3.4

export PYSPARK_PYTHON=python3

export PATH=$PATH:$SPARK_HOME/bin

alias cds='cd $SPARK_HOME'

기본 연결 테스트

pyspark --version

root@eoks01:/media# pyspark --version

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.3.4

/_/

Using Scala version 2.12.15, OpenJDK 64-Bit Server VM, 1.8.0_262

Branch HEAD

Compiled by user centos on 2023-12-08T19:26:29Z

Revision 18db204995b32e87a650f2f09f9bcf047ddafa90

Url https://github.com/apache/spark

Type --help for more information.

python3 --version

root@eoks01:/media# python3 --version

Python 3.6.8

pyspark

root@eoks01:/media# pyspark

Python 3.6.8 (default, Nov 8 2023, 18:52:33)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44.0.3)] on linux

Type "help", "copyright", "credits" or "license" for more information.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

24/01/26 08:27:24 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.3.4

/_/

Using Python version 3.6.8 (default, Nov 8 2023 18:52:33)

Spark context Web UI available at http://eoks01:4040

Spark context available as 'sc' (master = local[*], app id = local-1706225246376).

SparkSession available as 'spark'.

>>> quit()

기본 설정

cd /media/spark-3.3.4/conf

cp spark-defaults.conf.template spark-defaults.conf

cp spark-env.sh.template spark-env.sh

cp log4j2.properties.template log4j2.properties

vi log4j2.properties

#추가

log4j.rootCategory=WARN, console

예제 실행 1

lines =sc.textFile("README.md")

lines.count()

lines.first()

pythonLines = lines.filter(lambda line : "Python" in line)

pythonLines.first()

root@eoks01:/media/spark-3.3.4# pyspark

Python 3.6.8 (default, Nov 8 2023, 18:52:33)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44.0.3)] on linux

Type "help", "copyright", "credits" or "license" for more information.

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

24/01/26 08:34:43 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.3.4

/_/

Using Python version 3.6.8 (default, Nov 8 2023 18:52:33)

Spark context Web UI available at http://eoks01:4040

Spark context available as 'sc' (master = local[*], app id = local-1706225686096).

SparkSession available as 'spark'.

>>> lines =sc.textFile("README.md")

>>> lines.count()

124

>>> lines.first()

'# Apache Spark'

>>> pythonLines = lines.filter(lambda line : "Python" in line)

>>> pythonLines.first()

'high-level APIs in Scala, Java, Python, and R, and an optimized engine that'

>>> quit()

예제 실행 2

vi test.py

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local").setAppName("test")

sc = SparkContext(conf=conf)

lines =sc.textFile("/media/spark-3.3.4/README.md")

print("lines.count() :", lines.count() )

print("lines.first() :", lines.first() )

pythonLines = lines.filter(lambda line : "Python" in line)

print("pythonLines.first() :", pythonLines.first())

+ Java 환경변수 설정

readlink -f /usr/bin/java

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64/bin/javac

-> $JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

# JAVA

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib

-- JDBC Connector 다운

https://dev.mysql.com/downloads/connector/j/

> Platform Independent

> mysql-connector-j-8.3.0.tar.gz 다운로드 > 필요 디렉토리에 넣어둠

cd /media/sf_01.oracle/8.mysql

tar -xvf mysql-connector-j-8.3.0.tar.gz

cd mysql-connector-j-8.3.0

cp -ap mysql-connector-j-8.3.0.jar $JAVA_HOME/lib

cp -ap mysql-connector-j-8.3.0.jar $SPARK_HOME/jars

root@eoks01:/usr/lib/mysql-connector-j-8.3.0# javap com.mysql.jdbc.Driver

Compiled from "Driver.java"

public class com.mysql.jdbc.Driver extends com.mysql.cj.jdbc.Driver {

public com.mysql.jdbc.Driver() throws java.sql.SQLException;

static {};

}

root@eoks01:/var/lib/mysql# service mysqld start

Redirecting to /bin/systemctl start mysqld.service

root@eoks01:/var/lib/mysql# service mysqld status

Redirecting to /bin/systemctl status mysqld.service

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2024-02-05 06:56:12 KST; 3s ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 17842 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 17892 (mysqld)

Status: "Server is operational"

Tasks: 38

Memory: 395.5M

CGroup: /system.slice/mysqld.service

└─17892 /usr/sbin/mysqld

Feb 05 06:56:09 eoks01.localdomain systemd[1]: Starting MySQL Server...

Feb 05 06:56:12 eoks01.localdomain systemd[1]: Started MySQL Server.

4. mysql - Spark Connect

사용 예제 참조

https://jyoondev.tistory.com/95

스파크 튜토리얼 - (7) 스파크 SQL

Spark SQL 스파크는 여러 방면에서 SQL을 사용할 수 있도록 지원합니다. 이전 포스팅들에서는 csv나 json파일을 통해 DataFrame을 만드는 방법을 잠깐 설명했습니다. 이 DataFrame 기능이 곧 Spark SQL에 포함

jyoondev.tistory.com

pyspark

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession \

.builder.config("spark.jars", "/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64/mysql-connector-j-8.3.0.jar")\

.master("local") \

.appName("spark-mysql") \

.getOrCreate()

jdbc = spark.read.format("jdbc")\

.option("url", "jdbc:mysql://localhost/mysql")\

.option("driver", "com.mysql.cj.jdbc.Driver")\

.option("user", "spark")\

.option("password", "welcome1")\

.option("dbtable", "orders")\

.load()

jdbc.show()

-> Mysql 에 있는 orders Table의 Data(Spark dataframe에 담긴)가 출력됨.

5. Mysql to Oracle

Oracle에 Table Meta 생성

-> 지금은 1건이구 타입도 간단해서 ㄱㅊ은데 .. 많아지면 어떻게 뽑아낼건지 ? ? ?

create table rpmmgr.orders (

ORDERID number NOT NULL,

CustomerID number NOT NULL,

EmployeeID number NOT NULL,

OrderDate DATE NOT NULL,

ShipperID number NOT NULL,

PRIMARY KEY (ORDERID)

);

Spark Connect Oracle

spark = SparkSession \

.builder \

.appName("spark-oracle") \

.config("spark.jars", "ojdbc8.jar") \

.getOrCreate()

jdbcDF = spark.read \

.format("jdbc") \

.option("url", "jdbc:oracle:thin:@192.168.56.121:1521/OREMDB") \

.option("dbtable", "ORDERS") \

.option("user", "USERID") \

.option("password", "PASSWORD") \

.load()

jdbcDF.show()

+-------+----------+----------+---------+---------+

|ORDERID|CUSTOMERID|EMPLOYEEID|ORDERDATE|SHIPPERID|

+-------+----------+----------+---------+---------+

+-------+----------+----------+---------+---------+

Spark df (mysql data) to Oracle (jdbcDF)

jdbc = jdbc.write.format("jdbc").options(

url="jdbc:oracle:thin:@192.168.56.121:1521/OREMDB",

driver = "oracle.jdbc.driver.OracleDriver",

dbtable = "ORDERS",

user="USERID",

password="PASSWORD").mode('append').save()

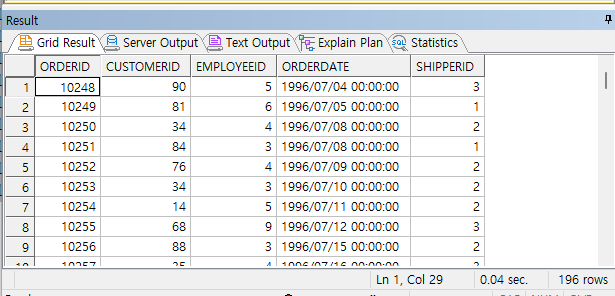

ORACLE DataFrame 확인

>>> jdbcDF.show()

+----------------+-------------+------------+-------------------+------------+

| ORDERID| CUSTOMERID| EMPLOYEEID| ORDERDATE| SHIPPERID|

+----------------+-------------+------------+-------------------+------------+

|10248.0000000000|90.0000000000|5.0000000000|1996-07-04 00:00:00|3.0000000000|

|10249.0000000000|81.0000000000|6.0000000000|1996-07-05 00:00:00|1.0000000000|

|10250.0000000000|34.0000000000|4.0000000000|1996-07-08 00:00:00|2.0000000000|

|10251.0000000000|84.0000000000|3.0000000000|1996-07-08 00:00:00|1.0000000000|

|10252.0000000000|76.0000000000|4.0000000000|1996-07-09 00:00:00|2.0000000000|

|10253.0000000000|34.0000000000|3.0000000000|1996-07-10 00:00:00|2.0000000000|

|10254.0000000000|14.0000000000|5.0000000000|1996-07-11 00:00:00|2.0000000000|

|10255.0000000000|68.0000000000|9.0000000000|1996-07-12 00:00:00|3.0000000000|

|10256.0000000000|88.0000000000|3.0000000000|1996-07-15 00:00:00|2.0000000000|

|10257.0000000000|35.0000000000|4.0000000000|1996-07-16 00:00:00|3.0000000000|

|10258.0000000000|20.0000000000|1.0000000000|1996-07-17 00:00:00|1.0000000000|

|10259.0000000000|13.0000000000|4.0000000000|1996-07-18 00:00:00|3.0000000000|

|10260.0000000000|55.0000000000|4.0000000000|1996-07-19 00:00:00|1.0000000000|

|10261.0000000000|61.0000000000|4.0000000000|1996-07-19 00:00:00|2.0000000000|

|10262.0000000000|65.0000000000|8.0000000000|1996-07-22 00:00:00|3.0000000000|

|10263.0000000000|20.0000000000|9.0000000000|1996-07-23 00:00:00|3.0000000000|

|10264.0000000000|24.0000000000|6.0000000000|1996-07-24 00:00:00|3.0000000000|

|10265.0000000000| 7.0000000000|2.0000000000|1996-07-25 00:00:00|1.0000000000|

|10266.0000000000|87.0000000000|3.0000000000|1996-07-26 00:00:00|3.0000000000|

|10267.0000000000|25.0000000000|4.0000000000|1996-07-29 00:00:00|1.0000000000|

+----------------+-------------+------------+-------------------+------------+

only showing top 20 rows

Oracle에서 확인

select * from orders;

ORDERID CUSTOMERID EMPLOYEEID ORDERDATE SHIPPERID

---------- ---------- ---------- --------- ----------

10248 90 5 04-JUL-96 3

10249 81 6 05-JUL-96 1

10250 34 4 08-JUL-96 2

10251 84 3 08-JUL-96 1

10252 76 4 09-JUL-96 2

10253 34 3 10-JUL-96 2

10254 14 5 11-JUL-96 2

10255 68 9 12-JUL-96 3

10256 88 3 15-JUL-96 2

10257 35 4 16-JUL-96 3

10258 20 1 17-JUL-96 1

10259 13 4 18-JUL-96 3

10260 55 4 19-JUL-96 1

10261 61 4 19-JUL-96 2

10262 65 8 22-JUL-96 3

10263 20 9 23-JUL-96 3

10264 24 6 24-JUL-96 3

10265 7 2 25-JUL-96 1

10266 87 3 26-JUL-96 3

10267 25 4 29-JUL-96 1

...

196건 data import 확인

'ETC > 도전' 카테고리의 다른 글

| Linux 64bit | Oracle database 19c Gateway for DRDA 설치 (DB2<-> ORACLE DBLINK 연결) (0) | 2025.03.20 |

|---|---|

| [DB2] 설치 (0) | 2025.03.20 |

| [ORACLE] CRS-6705 | 업그레이드 시 grid Software 버전이 active 버전과 다른 오류 (0) | 2023.03.24 |

| 아크로니스 Cyber Protect Home Office 사용기 2 (복구.. 실패 .. ^^) (0) | 2023.02.28 |

| 아크로니스 Cyber Protect Home Office 사용기 (0) | 2022.11.30 |